The Ultimate Guide to Software-Defined Memory (SDM): Everything You Need to Know

Kove Insights

In this, our ultimate guide to software-defined memory, we answer some of the key questions technologists are asking about this pivotal, breakthrough technology.

Memory demand is exploding. The rise of AI/ML, combined with growth in edge computing, containerization and other memory-hungry workloads, has put more stress on enterprises, their technologists and their infrastructure. That infrastructure is struggling to scale at the same rate, especially for artificial intelligence and machine learning jobs. Software-defined memory (SDM) can help solve the problem almost immediately, because SDM allows memory to move where and when it’s needed, with no new hardware required. So what is software-defined memory, and what makes this high-performance computing solution so beneficial to organizations looking to scale their dynamic memory allocation?

What is Software-Defined Memory?

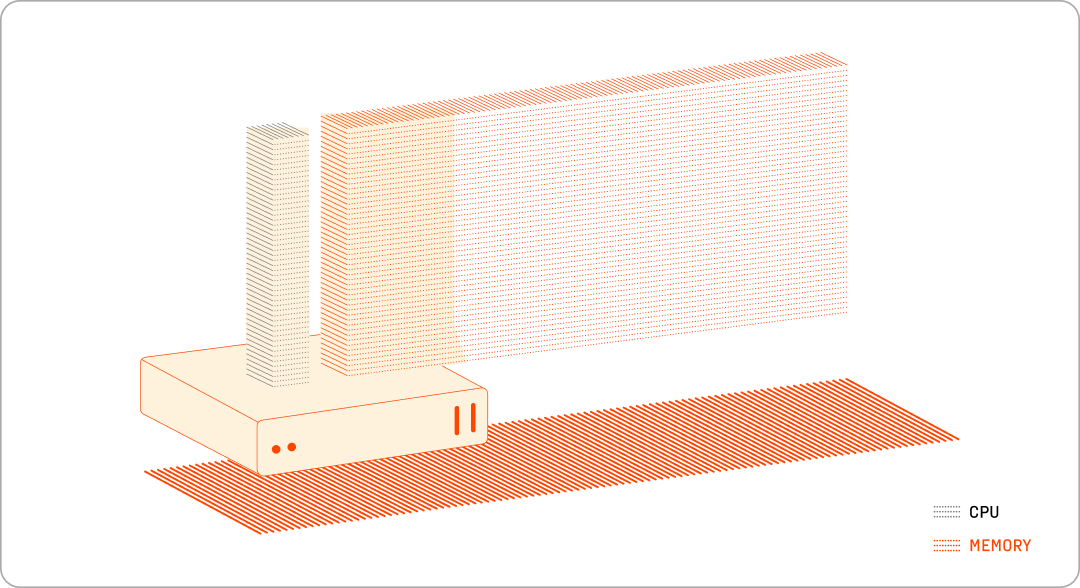

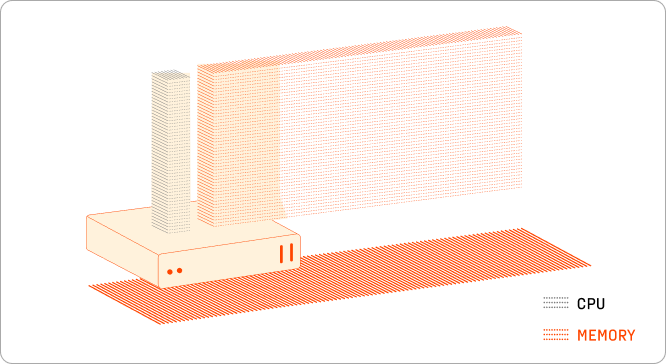

Software-Defined Technologies (SDT) refers to the management and optimization of virtualized resources, including storage, computing, networking and, of course, memory. Virtualized memory, a solution only possible with Kove® Software-Defined Memory (Kove:SDM™), enables individual servers to draw from a common memory pool, receiving exactly the amount of memory needed, including amounts far larger than can be contained within a physical server.

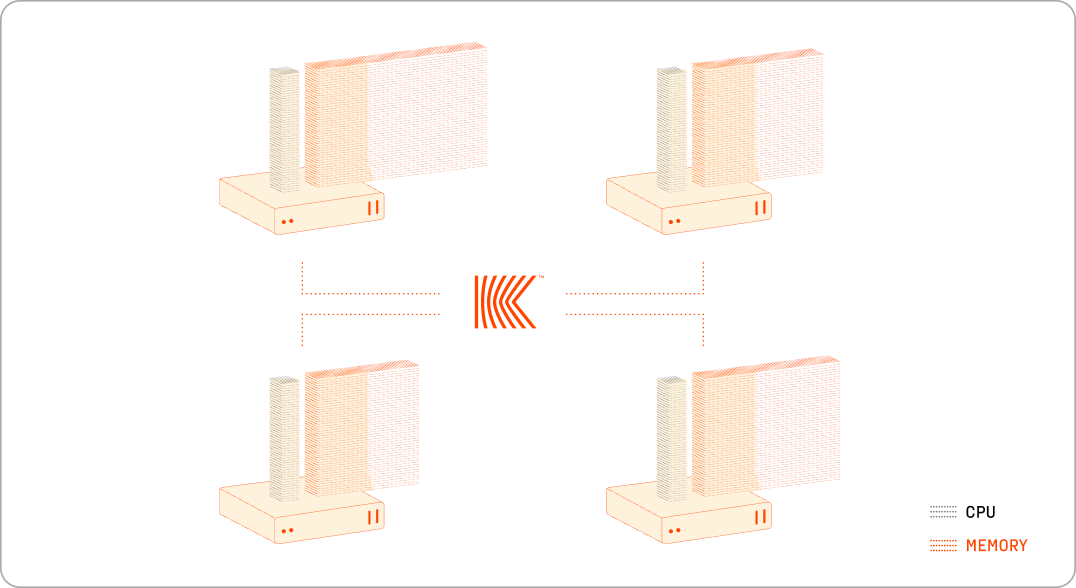

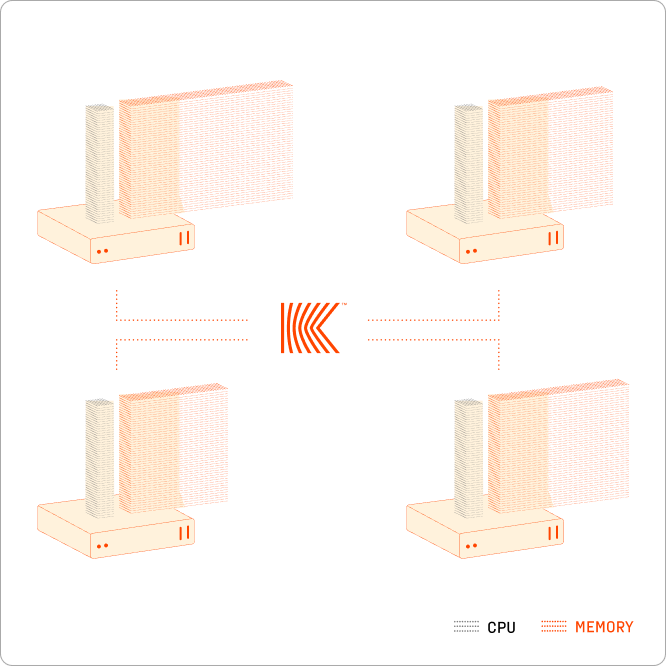

This kind of memory pooling is available as a scalable solution now, only through SDM. When a job completes, its memory returns to the pool and becomes available to other servers and jobs, increasing memory utilization. As a result, enterprises need less memory in individual servers and less aggregate memory, because they use memory more strategically: on demand, where and when it is needed.
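The borrow-and-return cycle of a memory pool can be sketched in a few lines of Python. This is a toy model for illustration only: the `MemoryPool` class, its method names and the job names are assumptions made here, not part of any Kove API, and sizes are simply counted in GiB.

```python
class MemoryPool:
    """Toy model of a shared memory pool (illustrative only, not a Kove API)."""

    def __init__(self, capacity_gib: int) -> None:
        self.capacity_gib = capacity_gib
        self.leases = {}  # job name -> GiB currently borrowed

    @property
    def free_gib(self) -> int:
        """Memory not currently borrowed by any job."""
        return self.capacity_gib - sum(self.leases.values())

    def borrow(self, job: str, gib: int) -> bool:
        """Grant the request only if the pool has enough free memory."""
        if gib <= 0 or job in self.leases or gib > self.free_gib:
            return False
        self.leases[job] = gib
        return True

    def release(self, job: str) -> int:
        """Return a finished job's memory to the pool; returns GiB freed."""
        return self.leases.pop(job, 0)


pool = MemoryPool(capacity_gib=1024)
pool.borrow("training-job", 768)

# Only 256 GiB is free, so a 512 GiB request cannot be granted yet.
second_fits_now = pool.borrow("analytics-job", 512)

# Once the first job finishes, its memory is available to the next job.
pool.release("training-job")
second_fits_later = pool.borrow("analytics-job", 512)
```

In this sketch the second job is refused while the first holds its lease and is granted once that memory returns to the pool, which is the utilization effect described above.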

Kove:SDM™ gives any server the flexibility to meet any need, configured on-the-fly exactly for the job at hand. With policies, customers can dynamically turn small-footprint servers into precisely sized ones and scale larger servers to whatever size a workload needs. No more wasting memory. You can use exactly what you need, anywhere, anyhow: big, small or ever changing.

How Has Memory Management Evolved?

Software-defined memory was long considered out of reach, with many even saying that it was impossible. Traditional memory management was thought of in terms of maximizing CPU memory on a per-server basis. Few thought of the capacity of a physical server as a limitation that could be overcome. For decades, the mindset was that memory was subject solely to server size and speed, cable distance and other similar constraints.

Despite the obvious benefits of requiring less overall server memory, technical challenges kept software-defined memory and memory virtualization unrealized, even as various initiatives tried to achieve it. A common approach was hardware-based Symmetric Multiprocessing (SMP), followed by software-based SMP (vSMP). Neither scaled cache coherency effectively, and performance degraded as memory moved farther away from the CPUs. Kove resolved these issues with its patented Kove:SDM™, which doesn’t have cache coherency scaling challenges and enables memory to be located hundreds of feet away from the CPUs without affecting predictable, well-understood, real local-memory performance.

Kove:SDM™ decouples memory from servers, pooling memory into an aggregate, provisionable and distributable resource across the rack or across the data center using unmodified Commercial-Off-The-Shelf (COTS) hardware. Like a Storage Area Network (SAN) provisioning storage using policies, Kove:SDM™ delivers a RAM Area Network (RAN) that provisions memory using policies. Kove:SDM™ provides a global, on-demand resource exactly where, when, and how it is needed. For example, an organization might:

- Use a Kove:SDM™ policy to allocate up to 2 TiB of need-based memory for any of 200 servers between 5:00 p.m. and 8:30 p.m.

- Temporarily upgrade eight 32 GB memory blade servers into 256 GB servers for burst computation.

- Define a rule that provisions a virtual machine with more memory than its physical hypervisor has, such as a 64 GiB RAM physical server (hypervisor) hosting a 512 GiB RAM virtual machine, for only a few minutes.

- Provision a 40 TiB server for a few hours or a 100 TiB RAM disk with RAID backing store for a temporary burst ingest every morning.

- Dynamically create variable virtualized servers for just about any use case it can imagine.
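To make the idea of policy-based provisioning more concrete, here is a minimal sketch of how the first and third examples above might be modeled. Everything in it is hypothetical: the `MemoryPolicy` record, its field names, the server names and the `pooled_gib_needed` helper are illustrative assumptions, not Kove's policy format or API. It also assumes the 2 TiB limit applies per server, which the example above does not specify.

```python
from dataclasses import dataclass
from datetime import time
from typing import FrozenSet

TIB_IN_GIB = 1024  # sizes below are tracked in GiB


@dataclass(frozen=True)
class MemoryPolicy:
    """Hypothetical policy record; field names are illustrative."""
    name: str
    max_gib_per_server: int  # assumption: the cap applies per server
    window_start: time
    window_end: time
    servers: FrozenSet[str]

    def allows(self, server: str, requested_gib: int, now: time) -> bool:
        """Check server membership, the time window and the size cap."""
        in_window = self.window_start <= now <= self.window_end
        return (
            server in self.servers
            and in_window
            and 0 < requested_gib <= self.max_gib_per_server
        )


def pooled_gib_needed(vm_gib: int, host_local_gib: int) -> int:
    """Memory the pool must supply when a VM is larger than its host."""
    return max(0, vm_gib - host_local_gib)


# Up to 2 TiB for any of 200 servers between 5:00 p.m. and 8:30 p.m.
evening_burst = MemoryPolicy(
    name="evening-burst",
    max_gib_per_server=2 * TIB_IN_GIB,
    window_start=time(17, 0),
    window_end=time(20, 30),
    servers=frozenset(f"server-{i:03d}" for i in range(200)),
)

inside_window = evening_burst.allows("server-042", 1536, time(18, 15))
outside_window = evening_burst.allows("server-042", 1536, time(21, 0))

# A 64 GiB hypervisor hosting a 512 GiB virtual machine.
shortfall_gib = pooled_gib_needed(vm_gib=512, host_local_gib=64)
```

Under these assumptions, the 6:15 p.m. request is allowed, the 9:00 p.m. request is refused, and the 512 GiB virtual machine would draw 448 GiB from the pool beyond its host's 64 GiB.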

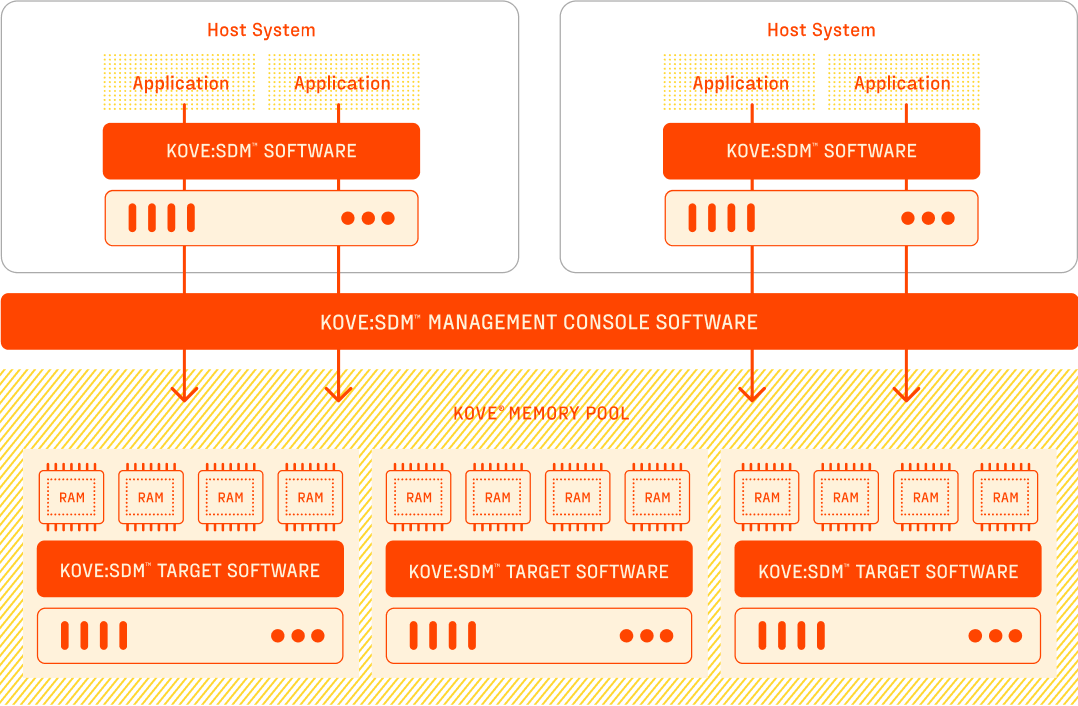

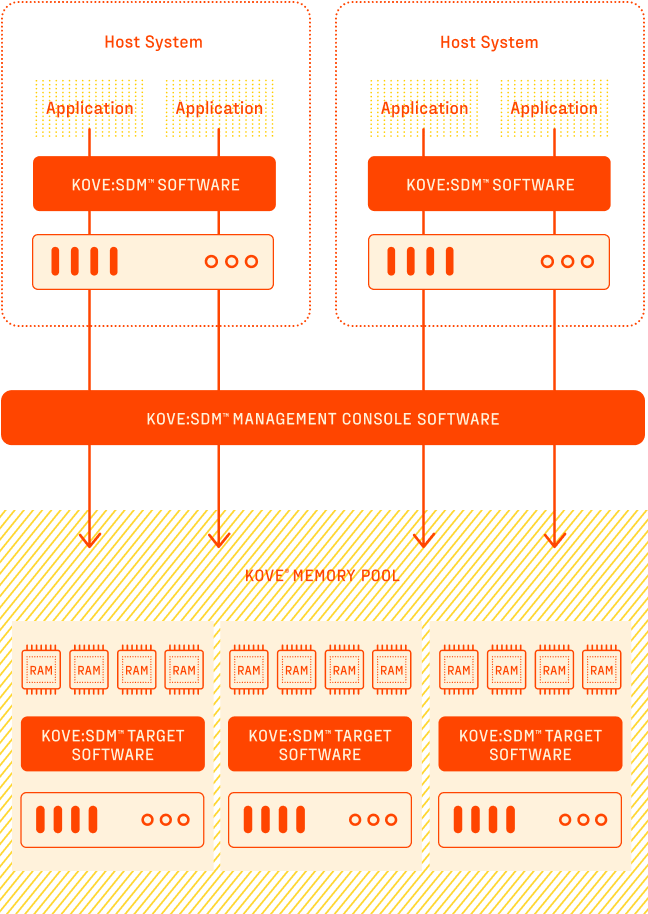

Kove:SDM™ works by using three transparent software components:

- A Management Console (MC) that orchestrates memory pool usage.

- The Kove® Host Software that connects applications to a memory pool.

- The Kove® XPD® Software, which converts servers into memory targets to form a memory pool. Users and applications never need to know that it is present.

If memory virtualization follows the same pattern as prior virtualizations in storage, compute and networking, it will be the software-defined side of the equation that produces groundbreaking gains vs. possibly meaningful but lesser gains seen in hardware design.

What Are Some Advantages of SDM?

As data sets grow larger, and as AI/ML, rich media, IoT and edge computing move from luxuries to essentials, a lack of memory flexibility has become the single greatest obstacle to achieving transformational growth. Software-defined memory conquers that challenge. SDM allows customers to size memory big or small, dynamically, wherever and whenever it is needed, on whatever hardware, for whichever workload.

For enterprises implementing Kove:SDM™, a few key advantages quickly emerge.

Performance Upgrades

No new or specialized servers need to be purchased, and Kove:SDM™’s performance is based on memory determinacy, not on CPU performance. Customers can extend the use of the servers they already own, even servers with outdated CPUs and ancient memory, converting them into fully functional data center memory. In fact, Kove has customers who have been actively using servers as memory targets for more than 13 years.

Energy Savings

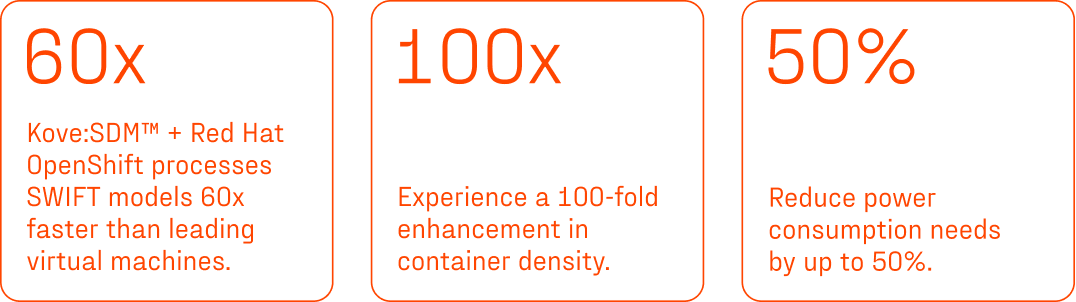

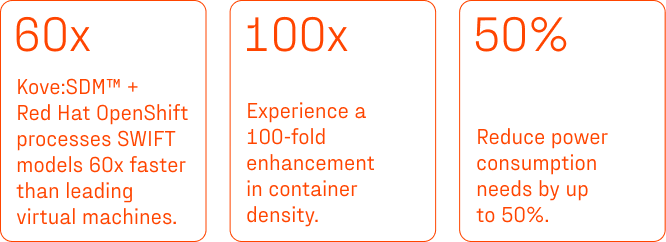

Implementing Kove:SDM™ can result in 25-50% less energy consumption. The breakthrough solution reduces power, heat and cooling needs — and your overall footprint — so you can better meet your organization’s environmental and sustainability goals. The resulting increase in utilization of each individual server means fewer servers are required to achieve the same level of work. That, in turn, cuts down on energy needs, eliminates future hardware waste and maximizes performance, all while delivering more efficient processing.

Cost Reductions

Not only does implementing Kove:SDM™ help organizations achieve more, but it’s also affordable. Enterprises can save money because, by better utilizing the servers they already have, they do not need to pay for as much memory as before. Customers can amortize memory costs across all servers, which reduces per-server costs and the number of servers needed, as well as associated operating costs, including software, personnel and more. Customers can experience an ROI in the 50-80% range, not counting the OpEx savings they also realize.
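The reasoning behind buying less aggregate memory can be illustrated with simple arithmetic. The sketch below compares sizing every server for its own peak against sizing a shared pool for the fleet's combined peak. The demand figures are invented purely for illustration; they are not measurements from Kove or any customer, and the model ignores details such as the local memory each server still needs.

```python
# Hypothetical memory demand (GiB) for four servers across three time slots.
demand_gib = {
    "server-a": [200, 40, 40],
    "server-b": [40, 220, 40],
    "server-c": [40, 40, 240],
    "server-d": [60, 60, 60],
}

# Without pooling, each server is sized for its own worst case.
per_server_total = sum(max(slots) for slots in demand_gib.values())

# With pooling, the fleet is sized for the worst combined demand in any slot.
pooled_total = max(sum(slot) for slot in zip(*demand_gib.values()))

# Fraction of memory that no longer needs to be purchased in this toy case.
reduction = 1 - pooled_total / per_server_total
```

In this made-up scenario the servers peak at different times, so the pooled total (380 GiB) is well below the sum of individual peaks (720 GiB). If every server peaked in the same time slot, the two totals would be equal and pooling would not reduce the memory required.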

Kove’s approach to software-defined memory delivers deterministic low latency and high CPU utilization. With Kove:SDM™, users receive local memory performance while transparently using virtualized memory from even hundreds of feet away. With policies on how the memory pool is used, customers can create custom server definitions on-the-fly, for whatever performance requirements they have. Imagine creating server definitions on-the-fly that you can’t even buy.

What Applications Benefit Most from SDM?

AI/ML jobs frequently suffer from memory constraints, but with Kove:SDM™, users can right-size memory to need, providing faster time-to-solution and better models. At the edge, customers can achieve data center performance with fewer resources and limited power. With in-memory databases, you can analyze data of any size and meet any computational need, no matter how large. With containers, technologists can run more jobs in parallel on a single server, increasing workload capability by up to 100x. In high-performance computing, Kove:SDM™ enables unlimited memory, so users can store and analyze massive amounts of data in memory to speed up processing. For enterprise and cloud providers, data can be moved closer to CPUs and GPUs, from across the rack to across the data center, meaning you can easily grow your capacity to meet demand or workload.

What Are the Most Successful SDM Solutions In-Market?

Kove is the first and only company to get customers over the memory wall. Kove:SDM™ is the only product in-market that delivers these performance gains and sustainability benefits. And the best part? It installs in minutes, with no new hardware or code changes needed.

Kove:SDM™ enables enterprises and their leaders to finally maximize the performance of their people and their infrastructure. In data centers, it offers the ability to scale memory linearly across all of your resources rather than merely inside individual servers. Plus, it works with the same performance properties as local memory, with flexibility that’s impossible to achieve in physical servers.

Enterprises can stop waiting around for the promised hardware advances of CXL and start seeing immediate performance results. There’s so much more that can be done in memory that isn’t hardware embedded, and that potential gives software a huge advantage. Software-defined approaches end up winning markets after obsoleting dedicated hardware approaches. This happened with storage virtualization, CPU virtualization and networking virtualization, and Kove:SDM™ allows you to achieve the same with memory virtualization. Kove lets you skip a generation of expensive and messy hardware upgrades and costs, and go directly to software-defined efficiencies.

How Does SDM Get Implemented?

Kove:SDM™ is implemented on commercial off-the-shelf (COTS) hardware. It utilizes two network fabrics:

- Ethernet for the Control Plane (i.e., command-and-control).

- InfiniBand for the Data Plane (i.e., memory data transfer).

InfiniBand is used by Kove:SDM™ exactly as it was designed: for memory. No special modifications are required by Kove:SDM™ to use Ethernet and InfiniBand. When designing Kove:SDM™, Kove engineers anticipated that a plurality of fast “memory interconnects”, such as PCIe, Photonics, RoCE, Slingshot and others, would be introduced, and they plan to support new memory interconnects as they become available.

Kove:SDM™ requires zero code changes, so applications run out of the box. What’s more, Kove:SDM™ provides developer APIs with kernel bypass for even faster performance. Provisioning control applies to all access methods and use cases. Kove:SDM™ uses an open systems architecture and standard Ethernet, servers and GPUs, so no hardware modifications are needed.

What Are Some Real Life Examples of SDM In-Use?

Swift is the financial backbone of the payments world. Roughly the equivalent of global GDP flows over the Swift network every three days. In those circumstances, where the stakes couldn’t be higher, Kove:SDM™ is Swift’s preferred software-defined memory solution.

To keep those transactions safe, secure and speedy, Swift partners with Kove to create a pool of software-defined memory. Empirical test results have shown that Kove enables Swift to achieve a 60x performance improvement on model training compared to a virtual machine running the same job on the same hardware.

Unlimited memory is possible right now. The results are real, and the impact on businesses is immediate and significant. With software-defined memory, Kove solved an enormous technological hurdle that was thought to be unachievable.

For years, forward-looking leaders who know what they want to achieve have lacked the tools — and the dynamic memory allocation capabilities — to get there. Until now.

Kove:SDM™ gives enterprises the memory size and performance they need when and where they need it, and allows leaders to do things they could not have done before.

To learn more about how Kove is helping enterprises like yours put their memory limitations behind them and achieve more than they ever thought possible, check out the News & Ideas section now.
